Social media as information warfare

By Lt. Col. Jarred Prier, U.S. Air Force

The adaptation of social media as a tool of modern warfare should not be surprising. Internet technology evolved to meet the needs of information-age warfare around 2006 with the dawn of Web 2.0, which allowed internet users to create content instead of just consuming online material. Now, individuals could not only decide what was important and read only that, on demand, but they could also use the medium to create news based on their opinions. The social nature of humans ultimately led to virtual networking. As such, traditional forms of media were bound to give way to a more tailorable form of communication. United States adversaries were quick to find ways to exploit the openness of the internet, eventually developing techniques to employ social media networks as a tool to spread propaganda. Social media creates a point of injection for propaganda and has become the nexus of information operations and cyber warfare. To understand this, we must examine the important concept of the social media trend and look briefly into the fundamentals of propaganda. Also important is the spread of news on social media, specifically, the spread of “fake news” and how propaganda penetrates mainstream media outlets.

Twitter, Facebook and other social media sites employ an algorithm to analyze words, phrases or hashtags and create a list of topics sorted by popularity. This “trend list” is a quick way to review the most discussed topics at a given time. According to “Trends in Social Media: Persistence and Decay,” a 2011 study conducted at Cornell University, a trending topic “will capture the attention of a large audience for a short time” and thus “contributes to agenda setting mechanisms.” Using existing online networks in conjunction with automatic bot accounts (autonomous programs that can interact with computer systems or users), agents can insert propaganda into a social media platform, create a trend, and disseminate a message faster and more cheaply than through any other medium. Social media facilitates the spread of a narrative beyond a particular social cluster of true believers by commanding the trend. Command of the trend relies on four elements:

- a message that fits an existing, even if obscure, narrative

- a group of true believers predisposed to the message

- a relatively small team of agents or cyber warriors

- a network of automated bot accounts

The existing narrative and the true believers who subscribe to it are endogenous, so any propaganda must fit that narrative to penetrate their network. Usually, the cyber team is responsible for crafting the specific message for dissemination. The cyber team then generates videos, memes or fake news, often in collusion with the true believers. To effectively spread the propaganda, the true believers, the cyber team and the bot network combine to take command of the trend. Thus, an adversary can influence the population using a variety of propaganda techniques, primarily through social media combined with online news sources and traditional forms of media.
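To make the idea of commanding the trend concrete, the following minimal Python sketch ranks hashtags by raw mention count within a single time window. It assumes a naive counting algorithm; real platforms use proprietary methods that also weigh how quickly a topic is growing, and the `trend_list` function and all data are hypothetical. It shows how a few seeded posts, amplified by true believers and bots, can overtake organic traffic.

```python
from collections import Counter

def trend_list(posts, top_n=3):
    """Rank hashtags by raw mention count within one time window.

    Hypothetical: real trend algorithms are proprietary and velocity-aware;
    simple counting is enough to show how volume moves a topic up the list.
    """
    counts = Counter(
        word.lower()
        for post in posts
        for word in post.split()
        if word.startswith("#")
    )
    return [tag for tag, _ in counts.most_common(top_n)]

# Organic traffic in one window (invented data).
organic = (["#SuperBowl great game"] * 40
           + ["#Weather snow again"] * 25
           + ["#Election polls"] * 20)

# A small core team seeds a message that true believers reshare ...
seeded = ["#FakeStory you won't believe this"] * 5 + ["#FakeStory sharing this"] * 10
# ... and a bot network repeats it automatically.
bots = ["#FakeStory"] * 30

print(trend_list(organic))                   # → ['#superbowl', '#weather', '#election']
print(trend_list(organic + seeded + bots))   # → ['#fakestory', '#superbowl', '#weather']
```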

Twitter makes real-time idea and event sharing possible on a global scale. A trend can spread a message to a wide group outside someone’s typical social network. Moreover, malicious actors can use trends to spread a message using multiple forms of media on multiple platforms, with the ultimate goal of garnering coverage in the mainstream media. Command of the trend is a powerful method of spreading information whereby, according to a February 2017 article in The Guardian, “you can take an existing trending topic, such as fake news, and then weaponize it. You can turn it against the very media that uncovered it.” Because Twitter is an idea-sharing platform, it is very popular for rapidly spreading information, especially among journalists and academics; however, malicious users have also taken to Twitter for the same benefits in recent years. At one time, groups like al-Qaida preferred creating websites, but now “Twitter has emerged as the internet application most preferred by terrorists, even more popular than self-designed websites or Facebook,” Gabriel Weimann notes in his book, Terrorism in Cyberspace: The Next Generation.

Three methods help control what is trending on social media: trend distribution, trend hijacking and trend creation. The first method is relatively easy and requires the fewest resources. Trend distribution is simply applying a message to every trending topic. For example, someone could tweet a picture of the president with a message in the form of a meme (a stylistic device that applies culturally relevant humor to a photo or video) along with the unrelated hashtag #SuperBowl. Anyone who clicks on that trend expecting to see something about football will see a meme that has nothing to do with the game. Trend hijacking, which takes over an existing trending topic and redirects its conversation, requires more resources in the form of either more followers spreading the message or a network of bots designed to spread the message automatically. Of the three methods to gain command of the trend, trend creation requires the most effort. It necessitates either money to promote a trend or knowledge of the social media environment around the topic and, most likely, a network of several automatic bot accounts. In 2014, Twitter estimated that only 5% of its accounts were bots; that share now tops 15%. Some of these accounts are “news bots,” which retweet trending topics. Some are advertising bots, which try to dominate conversations to generate revenue through clicks on links. Others are trolls, which, like their human counterparts, tweet to disrupt civil conversation.
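Because trend distribution attaches one message to many unrelated trending hashtags, it leaves a recognizable signature. The sketch below is a hypothetical heuristic, not any platform's actual filter: it flags posts that carry several currently trending hashtags at once. The function name and the threshold of three are assumptions.

```python
def looks_like_trend_distribution(post, trending, threshold=3):
    """Flag a post that carries at least `threshold` currently trending hashtags.

    Hypothetical heuristic: trend distribution pastes one message onto many
    unrelated trends, so several trending tags in one post is suspicious.
    """
    # Collect the post's hashtags, ignoring case and trailing punctuation.
    tags = {w.lower().rstrip(".,!?") for w in post.split() if w.startswith("#")}
    trending_tags = {t.lower() for t in trending}
    return len(tags & trending_tags) >= threshold

trending = ["#SuperBowl", "#Oscars", "#Weather", "#Election"]
print(looks_like_trend_distribution("What a catch! #SuperBowl", trending))  # → False
print(looks_like_trend_distribution(
    "Look at this meme #SuperBowl #Oscars #Weather #Election", trending))    # → True
```

A single threshold like this would also catch some legitimate posts, which is one reason such signals are usually combined with others.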

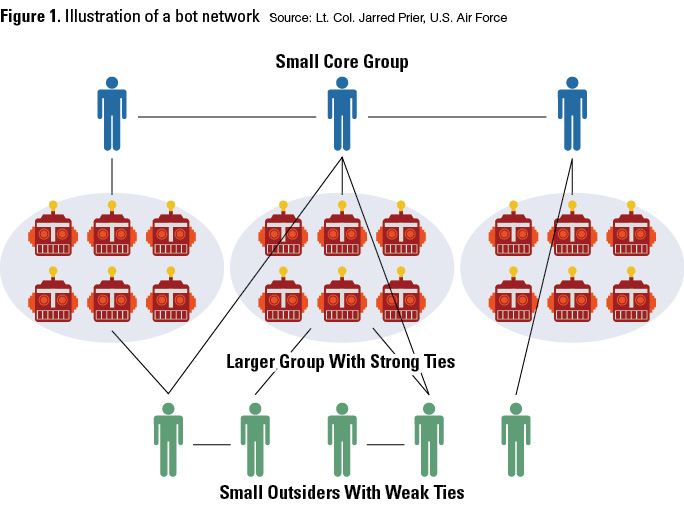

For malicious actors seeking to influence a population through social media trends, the best way is to build a network of bot accounts programmed to tweet at various intervals, respond to certain words or retweet when directed by a master account. Figure 1 illustrates the basics of a bot network. The top of the chain is a small core group. That team is composed of human-controlled accounts with a large number of followers. The accounts are typically adversary cyber warriors or true believers with a large following. Under the core group is the bot network. Bots tend to follow each other and the core group. Below the bot network is a group consisting of the true believers without a large following. These human-controlled accounts are a part of the network, but they appear to be outsiders because of the weaker links between the accounts. The bottom group lacks a large following, but they do follow the core group, sometimes follow bot accounts, and seldom follow each other.
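The layered structure in Figure 1 can be approximated as a directed follow graph. In this minimal sketch the account names and edges are invented; counting incoming follow edges shows the core group collecting the most followers, the bots following each other and the core, and the periphery remaining weakly linked.

```python
from collections import Counter

# Hypothetical follow graph loosely modeled on Figure 1:
# each key follows every account in its list.
follows = {
    # Core group: human-run accounts with large followings.
    "core_a": ["core_b"],
    "core_b": ["core_a"],
    # Bot network: bots follow each other and the core group.
    "bot_1": ["core_a", "core_b", "bot_2", "bot_3"],
    "bot_2": ["core_a", "core_b", "bot_1", "bot_3"],
    "bot_3": ["core_a", "core_b", "bot_1", "bot_2"],
    # Periphery: true believers without large followings. They follow the
    # core, sometimes a bot, and seldom each other.
    "fan_1": ["core_a", "core_b", "bot_1"],
    "fan_2": ["core_a"],
    "fan_3": ["core_b"],
}

def follower_counts(graph):
    """Count incoming follow edges (followers) for every account."""
    counts = Counter({account: 0 for account in graph})
    for followees in graph.values():
        counts.update(followees)
    return counts

counts = follower_counts(follows)
for account, n in counts.most_common():
    print(f"{account}: {n} followers")
```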

Enough bots working together can quickly start a trend or take over a trend, but bot accounts themselves can only bridge the structural hole between networks, not completely change a narrative. To change a narrative — to conduct an effective influence operation — requires a group to combine a well-coordinated bot campaign with essential elements of propaganda.

For propaganda to function, it needs a previously existing narrative to build upon, as well as a network of true believers who already buy into the underlying theme. Social media helps the propagandist spread the message through an established network. A person is inclined to believe information on social media because the people he chooses to follow share things that fit his existing beliefs. That person, in turn, is likely to share the information with others in his network, with others who are like-minded, and with those predisposed to the message. With enough shares, a particular social network accepts the propaganda storyline as fact. But up to this point, the effects are relatively localized.

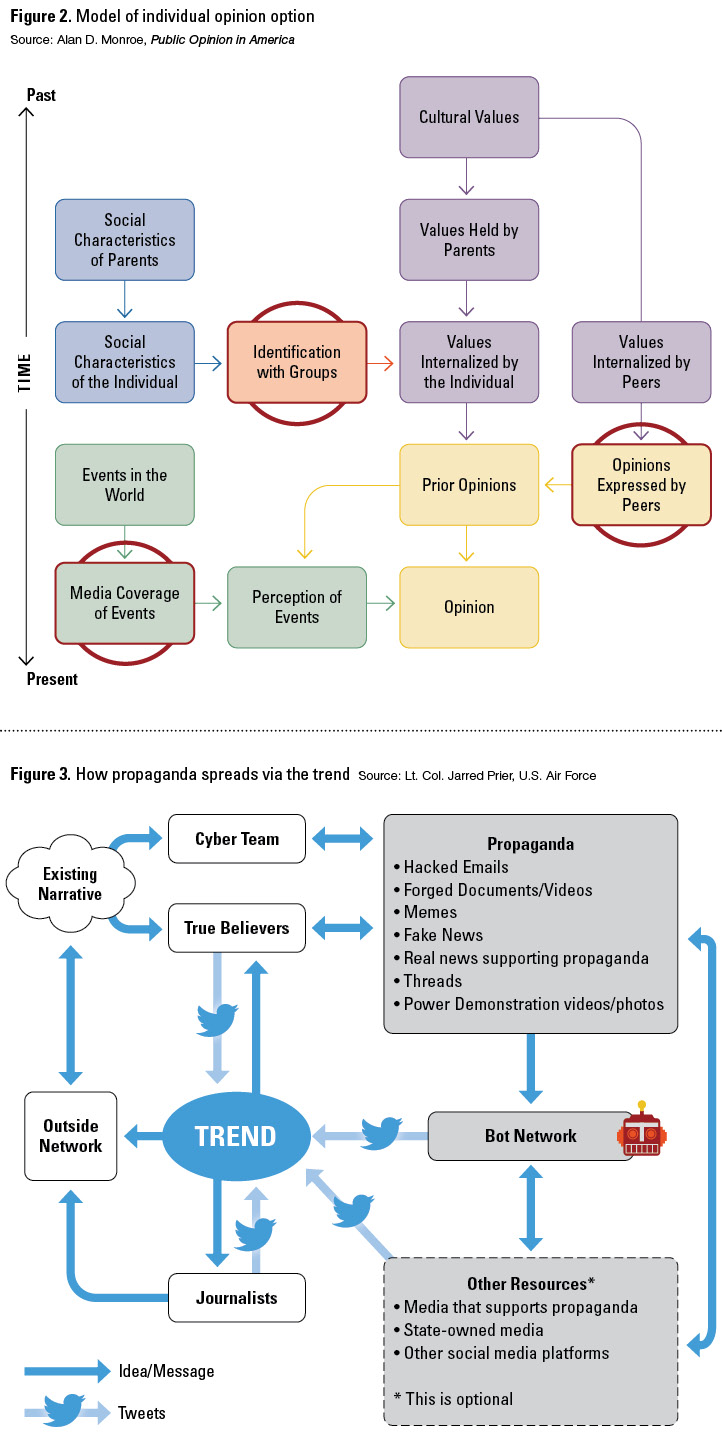

The most effective propaganda campaigns are not confined to those predisposed to the message. Propaganda permeates everyday experiences, and those targeted with a massive media blitz will never fully understand that the ideas they hold are not entirely their own. A modern example of this phenomenon was observable during the Arab Spring, when propaganda spread on Facebook “helped middle-class Egyptians understand that they were not alone in their frustration,” Thomas Rid writes in Cyber War Will Not Take Place. Propaganda is simpler to grasp if everyone around a person seems to share the same emotions on a particular subject. In other words, propaganda creates heuristics, the shortcuts the mind uses to simplify problem solving by relying on quickly accessible data. In Thinking, Fast and Slow, Daniel Kahneman explains that the availability heuristic gives more weight to the amount, frequency and recency of the information received than to its source or accuracy. The mind creates a shortcut based on the most, or the most recent, information available, simply because that information can be remembered easily. The lines in Figure 2 show the formation of opinions over time, with bold arrows influencing a final opinion more than light arrows. The circled containers indicate a penetration point for propaganda exploitation. As previously described, mass media enables the rapid spread of propaganda, which feeds the availability heuristic. The internet makes it possible to flood the average person’s daily intake of information, which aids the spread of propaganda.
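As a rough illustration of the availability heuristic as described above, this toy Python model scores a claim by summing its exposures, with each exposure discounted by its age; the accuracy of the source plays no part. The exponential decay, the two-day half-life, the `availability_weight` name and the data are illustrative assumptions, not Kahneman's own model.

```python
def availability_weight(exposures, now, half_life=2.0):
    """Toy 'availability' score for a claim.

    Each exposure adds weight that halves every `half_life` days, so frequent
    and recent exposures dominate. Source accuracy is deliberately ignored.
    The decay form and half-life are illustrative assumptions.
    """
    return sum(0.5 ** ((now - t) / half_life) for t in exposures)

# Exposure times in days (hypothetical). A false claim seen ten times within
# the last two days versus one accurate report read six days ago.
false_claim = [5.0, 5.5, 6.0, 6.0, 6.2, 6.5, 6.8, 7.0, 7.0, 7.0]
accurate_report = [1.0]
now = 7.0

print(availability_weight(accurate_report, now))  # → 0.125
print(availability_weight(false_claim, now) > availability_weight(accurate_report, now))  # → True
```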

One of the primary principles of propaganda is that the message must resonate with the target. When people are presented with information that fits within their belief structure, their bias is confirmed and they accept the propaganda. If the information comes from outside their network, they may initially reject the story, but the sheer volume of information may create an availability heuristic. Over time, the propaganda becomes normalized and even believable. It is confirmed when the mainstream media, which has come to rely on social media for spreading and receiving news, reports a fake news story. Figure 3 maps the process of how propaganda can penetrate a network that is not predisposed to the message. This outside network is a group that is ideologically opposed to the group of true believers. The outside network is likely aware of the existing narrative but does not necessarily subscribe to the underlying beliefs that support the narrative.

Command of the trend enables the contemporary propaganda model to create a “firehose of information” that always permits the insertion of false narratives. Trending items produce the illusion of reality, in some cases even being reported by journalists. Because untruths can spread so quickly, the internet has created “both deliberate and unwitting propaganda” since the early 1990s through the proliferation of rumors passed as legitimate news, according to Garth Jowett and Victoria O’Donnell in Propaganda & Persuasion. The normalization of these types of rumors over time, combined with the rapidity and volume of new false narratives over social media, opened the door for fake news.

The availability heuristic and the firehose of disinformation can slowly alter opinions as propaganda crosses networks by way of the trend, but the amount of influence will likely be minimal unless it comes from a source that a nonbeliever finds trustworthy. An individual may see the propaganda but still not buy into the message without turning to a trusted source of news to test its validity.

Social networks and social media

As social media usage has become more widespread, users have become ensconced within specific, self-selected groups, which means that news and views are shared nearly exclusively with like-minded users. In network terminology, this group phenomenon is called homophily. More colloquially, it reflects the concept that “birds of a feather flock together.” Homophily within social media creates an aura of expertise and trustworthiness where those factors would not normally exist. People are more willing to believe things that fit into their worldview. According to Jowett and O’Donnell, once source credibility is established in one area, there is a tendency to accept that source as an expert on other issues as well, even if the issue is unrelated to the area of originally perceived expertise. Ultimately, Tom Hashemi writes in a December 2016 article for the War on the Rocks website, this “echo chamber” can promote a scenario in which your friend is “just as much a source of insightful analysis on the nuances of U.S. foreign policy towards Iran as regional scholars, arms control experts, or journalists covering the State Department.”
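Homophily can be quantified in several ways; one simple measure, used here purely as an illustration, is the share of follow edges that link accounts in the same group. The accounts, group labels and the `homophily_share` name below are hypothetical.

```python
def homophily_share(edges, group):
    """Share of follow edges that connect two accounts in the same group.

    Values near 1.0 suggest an echo chamber.
    """
    if not edges:
        return 0.0
    same = sum(1 for a, b in edges if group[a] == group[b])
    return same / len(edges)

group = {"ann": "A", "bob": "A", "cat": "A",
         "dan": "B", "eve": "B", "fay": "B"}
edges = [("ann", "bob"), ("bob", "cat"), ("cat", "ann"),
         ("dan", "eve"), ("eve", "fay"), ("fay", "dan"),
         ("cat", "dan")]  # a single cross-cluster link

print(round(homophily_share(edges, group), 2))  # → 0.86
```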

If social media facilitates self-reinforcing networks of like-minded users, how can a propaganda message traverse networks where there are no overlapping nodes? Even where two networks do touch, the link between them is often based on a single shared topic and can be easily severed. Thus, to employ social media effectively as a tool of propaganda, an adversary must exploit a feature within the social media platform that enables cross-network data sharing on a massive scale: the trending topics list. Trends are visible to everyone. Regardless of who follows whom on a given social media platform, all users see the topics algorithmically generated by the platform as being the most popular topics at that particular moment. Given this universal and unavoidable visibility, “popular topics contribute to the collective awareness of what is trending and at times can also affect the public agenda of the community,” according to the Cornell University study. In this manner, a trending topic can bridge the gap between clusters of social networks. A malicious actor can quickly spread propaganda by injecting a narrative onto the trend list. The combination of networking on social media, propaganda and reliance on unverifiable online news sources introduces the possibility of completely falsified news stories entering the mainstream of public consciousness.
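The bridging role of the trend list can be sketched with a breadth-first search over a hypothetical follow graph made of two clusters with no overlapping nodes. The sketch assumes every viewer reshares the message, and it models the trend list as a pseudo-account (`TREND`, an invented name) that every user sees; both are simplifying assumptions.

```python
from collections import deque

def reach(followers, seeds):
    """Return every account that sees a message, assuming each viewer reshares it.

    followers[x] lists the accounts that follow x (and so see x's posts).
    """
    seen = set(seeds)
    queue = deque(seeds)
    while queue:
        account = queue.popleft()
        for follower in followers.get(account, []):
            if follower not in seen:
                seen.add(follower)
                queue.append(follower)
    return seen

# Two homophilous clusters with no overlapping nodes (hypothetical data).
followers = {
    "a1": ["a2", "a3"], "a2": ["a1", "a3"], "a3": ["a1"],
    "b1": ["b2", "b3"], "b2": ["b1"], "b3": ["b2"],
}
all_users = set(followers)

print(sorted(reach(followers, ["a1"])))  # → ['a1', 'a2', 'a3']

# Once the message trends, the trend list (a pseudo-account every user
# sees) carries it to everyone, whoever they follow.
with_trend = {account: list(fs) for account, fs in followers.items()}
with_trend["TREND"] = sorted(all_users)
with_trend["a1"].append("TREND")  # a1's message reaches the trend list
print(sorted(reach(with_trend, ["a1"]) - {"TREND"}))
# → ['a1', 'a2', 'a3', 'b1', 'b2', 'b3']
```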

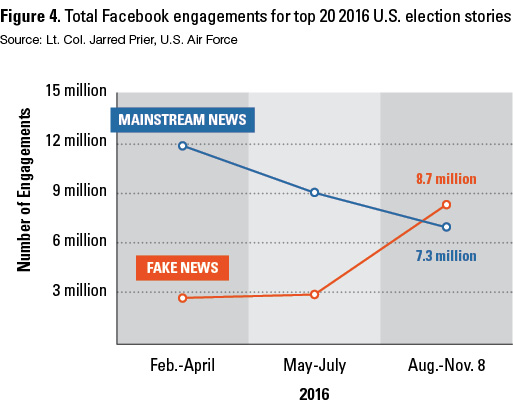

Fake news consists of more than just bad headlines, buried ledes or poorly sourced stories; it is a particular form of propaganda composed of a false story disguised as news. On social media, this becomes particularly dangerous because of the viral spread of sensationalized fake news stories. A prime example of fake news on social media came from the most shared news stories on Facebook during the 2016 U.S. presidential election. A story stating that the pope had endorsed Donald Trump for president received over 1 million shares on Facebook alone, not counting Twitter, according to BuzzFeed. The source was a supposedly patriotic American news blog called Ending the Fed, a website run by Romanian businessperson Ovidiu Drobota. Fake news stories from that site and others received more shares in late 2016 than did stories from traditional mainstream news sources (see Figure 4).

It is important to recognize that more people were exposed to those fake news stories than the “shares” data reflect. Over time, those fake news sources become trusted sources for some people, and as those people learn to trust them, legitimate news outlets come to seem less trustworthy.

Russia: masters of manipulation

Russia is no stranger to information warfare. The Soviet Union relied on the techniques of aktivnyye meropriyatiya (active measures) and dezinformatsiya (disinformation). According to a 1987 State Department report on Soviet information warfare, “active measures are distinct both from espionage and counterintelligence and from traditional diplomatic and informational activities. The goal of active measures is to influence opinions and/or actions of individuals, governments, and/or publics.” In other words, Soviet agents would try to weave propaganda into an existing narrative to smear countries or individuals. Active measures are designed, as retired KGB Gen. Oleg Kalugin once explained, “to drive wedges in the Western community alliances of all sorts, particularly NATO, to sow discord among allies, to weaken the U.S. in the eyes of the people in Europe, Asia, Africa, Latin America, and thus to prepare ground in case the war really occurs.” Noted Russia analyst Michael Weiss says, “The most common subcategory of active measures is dezinformatsiya, or disinformation: feverish, if believable lies cooked up by Moscow Centre and planted in friendly media outlets to make democratic nations look sinister.”

Russia’s trolls have a variety of state resources at their disposal, including the assistance of a vast intelligence network. Additional tools include RT (Russia Today) and Sputnik, Kremlin-financed news outlets broadcasting in multiple languages around the world. Before the trolls begin their activities on social media, the hackers first provide stolen information to WikiLeaks, which then-CIA Director Mike Pompeo described as a “nonstate hostile intelligence service abetted by state actors like Russia.” In intelligence terms, WikiLeaks operates as a “cutout” for Russian intelligence operations (an outside organization through which intelligence information can be spread), similar to the Soviets’ use of universities to publish propaganda studies in the 1980s. The trolls then take command of the trend, spreading the hacked information on Twitter while referencing WikiLeaks and RT to provide credibility. These efforts would be impossible without an existing network of American true believers willing to spread the message. The Russian trolls and the bot accounts also amplified the voices of the true believers. Then, the combined effects of Russian and American Twitter accounts took command of the trend to spread disinformation across networks.

Division and chaos

One particularly effective Twitter hoax occurred amid racial unrest on the University of Missouri campus. On the night of November 11, 2015, #PrayforMizzou began trending on Twitter as a result of protests over racial issues at the university (known colloquially as Mizzou). However, “news” that the Ku Klux Klan (KKK) was marching through the campus and the adjoining city of Columbia started developing within the hashtag, altering its meaning and shooting it to the top of the trend list. A user with the display name “Jermaine” (@Fanfan1911) warned residents, “The cops are marching with the KKK! They beat up my little brother! Watch out!” The tweet included a picture of a black child with a severely bruised face; it was retweeted hundreds of times. Jermaine and a handful of other users continued tweeting and retweeting images and stories of KKK members and neo-Nazis in Columbia, chastising the media for not covering the racists creating havoc on campus.

An examination of Jermaine’s followers, and the followers of his followers, showed that the original tweeters all followed and retweeted each other and were retweeted automatically by approximately 70 bots. The bots used the trend-distribution technique, placing all of the hashtags trending at that time in their tweets, not just #PrayforMizzou. With the bot tweets spaced evenly, and with retweets from real people who were following the Mizzou hashtag, the numbers quickly escalated to thousands of tweets within a few minutes, including tweets from the Mizzou student body president and posts from local and national news outlets that, taken in by the deception, supported the false narrative. The plot was smoothly executed and evaded the algorithms Twitter designed to catch bot tweeting, mainly because the Mizzou hashtag was also in legitimate use outside the attack. The narrative was set as the trend was hijacked, and the hoax was underway.
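Evenly spaced bot tweets are, in isolation, a detectable signal. One simple, hypothetical measure is the coefficient of variation of the gaps between an account's posts: scheduled posting produces a value near zero, while human posting tends to be bursty. This is not Twitter's actual detection logic, and as the Mizzou case shows, genuine use of the same hashtag can mask such patterns when tweets are viewed in aggregate. The `interval_regularity` name and the timestamps are assumptions.

```python
from statistics import mean, pstdev

def interval_regularity(timestamps):
    """Coefficient of variation (std / mean) of the gaps between posts.

    Near 0.0: metronome-like, scheduled posting. Well above 1.0: bursty,
    human-like posting. Requires at least two distinct timestamps.
    """
    times = sorted(timestamps)
    gaps = [b - a for a, b in zip(times, times[1:])]
    return pstdev(gaps) / mean(gaps)

bot_times = [0, 30, 60, 90, 120, 150]    # seconds, evenly spaced
human_times = [0, 4, 9, 200, 210, 900]   # bursty

print(interval_regularity(bot_times))          # → 0.0
print(interval_regularity(human_times) > 1.0)  # → True
```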

Shortly after the disinformation campaign at Mizzou, @Fanfan1911 changed his display name from Jermaine to “FanFan” and his profile picture from that of a young black male to a German iron cross. For the next few months, FanFan tweeted in German about Syrian refugees, focusing on messages that were anti-Islamic, anti–European Union and hostile to German Chancellor Angela Merkel. The campaign reached a crescendo after reports that refugees from Muslim countries had raped women on New Year’s Eve 2015. Some of the reports were false, including a high-profile case of a 13-year-old ethnic-Russian girl living in Berlin who falsely claimed that she had been abducted and raped by refugees.

Once again, Russian propaganda dominated the narrative. As in previous disinformation campaigns on Twitter, Russian trolls spread disinformation by exploiting an underlying fear and an existing narrative. They used trend-hijacking techniques in concert with reporting by RT. To draw more attention in European media to Russia’s anti-Merkel narrative, Russian Foreign Minister Sergey Lavrov accused German authorities of a “politically correct cover-up” in the case of the Russian teen. Aided by the Russian propaganda push, the anti-immigration narrative began spreading across traditional European media.

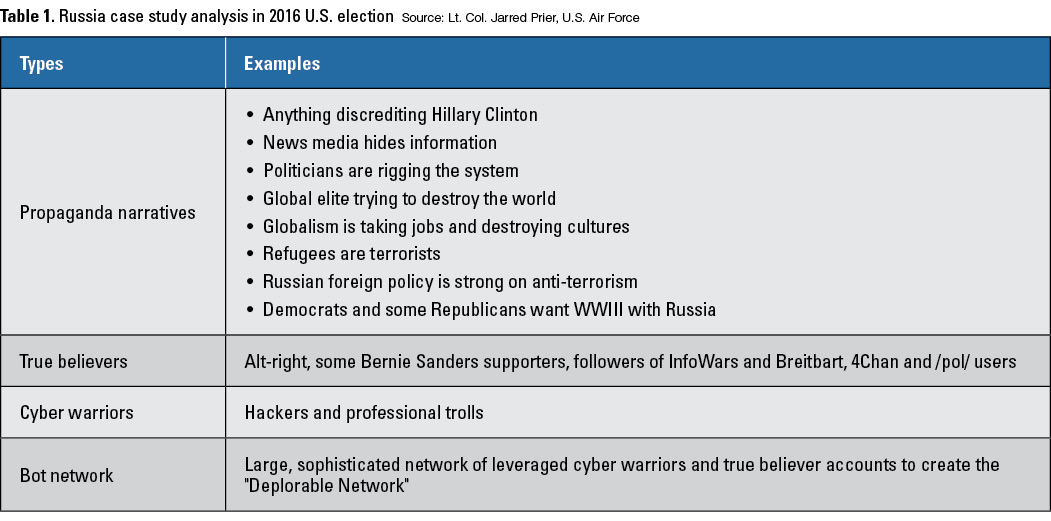

Influencing the 2016 presidential election

According to the U.S. Office of the Director of National Intelligence (ODNI) report on Russian influence during the 2016 presidential election, “Moscow’s influence campaign followed a messaging strategy that blends covert intelligence operations — such as cyber activity — with overt efforts by Russian Government agencies, state funded media, third-party intermediaries, and paid social media users, or ‘trolls.’” Russian propaganda easily meshed with the views of “alt-right” networks and those of U.S. Sen. Bernie Sanders’ supporters on the left wing of the Democratic Party. In a September 2016 speech, candidate Hillary Clinton described half of candidate Trump’s supporters as a “basket of deplorables” and said that the other half were people who felt the system had left them behind and who needed support and empathy. The narrative quickly changed after Trump supporters began referring to themselves as “Deplorable” in their social media screen names.

Before the “deplorables” comment, the Russian trolls primarily used an algorithm to rapidly respond to a Trump tweet, with their tweets prominently displayed directly under Trump’s if a user clicked on the original. After the Clinton speech, a search on Twitter for “deplorable” was all one needed to suddenly gain a network of followers numbering between 3,000 and 70,000. Once again, FanFan’s name changed — this time to “Deplorable Lucy” — and the profile picture became a white, middle-aged female with a Trump logo at the bottom of the picture. FanFan’s followers went from just over 1,000 to 11,000 within a few days. His original network from the Mizzou and European campaigns changed as well: Tracing his follower trail again led to the same groups of people in the same network, and they were all now defined by the “Deplorable” brand. In short, they were now completely in unison with a vast network of other Russian trolls, actual American citizens, and bot accounts from both countries on Twitter, making it suddenly easier to get topics trending. The Russian trolls could employ the previously used tactics of bot tweets and hashtag hijacking, but now they had the capability to create trends.

Coinciding with the implementation of the strategy to mask anti-Trump comments on Twitter, WikiLeaks began releasing Clinton campaign chairman John Podesta’s stolen emails. The emails themselves revealed nothing truly controversial, but the powerful narrative created by a trending hashtag conflated Podesta’s emails with Clinton’s use of a private email server while she was secretary of state. In addition, the Podesta email narrative took routine issues and made them seem scandalous. The most common theme was to discredit the mainstream media by distorting the stolen emails into conspiracies of attempted media “rigging” of the election in Clinton’s favor. The corruption narrative also plagued the Democratic National Committee, which had been hacked earlier in the year by Russian sources, according to the ODNI report, with the stolen material then published by WikiLeaks. Another of Podesta’s stolen emails was an invitation to a party at a friend’s home that promised pizza from Comet Ping Pong pizzeria and a pool to entertain the kids. It was turned into a fake news conspiracy theory (#PizzaGate) claiming that the email was code for a pedophilic sex party. The theory led a man to go to Comet Ping Pong armed with an AR-15 rifle, prepared to free children from an underground child sex trafficking ring.

The #PizzaGate hoax, along with other false and quasi-false narratives, became common within right-wing media as another indication of the immorality of Clinton and her staff. Often, the mainstream media would latch onto a story with an unsavory background and false pretenses, thus giving more credibility to fake news. The #PizzaGate hoax, however, followed the common propaganda narrative that the media was trying to cover up the truth and that the government had failed to investigate the crimes. The trend became so sensational that traditional media outlets chose to cover the Podesta email story, which gave credibility to the fake news and the associated online conspiracy theories promulgated by the Deplorable Network. The WikiLeaks release of the Podesta emails was the peak of Russian command of the trend during the 2016 election. Nearly every day, #PodestaEmail trended as a new batch of supposedly scandalous hacked emails made its way into the mainstream press.

Based on my analysis, the bot network appeared to consist of between 16,000 and 34,000 accounts. The cohesiveness of the group shows how a coordinated effort can create a trend in a way that a less cohesive network could not. To conduct cyber attacks using social media as information warfare, an organization must have a vast network of bot accounts to take command of the trend. Because some factors, such as the impact of fake news, remain uncertain, the true results of the Russian influence operation will likely never be known. As philosopher Jacques Ellul said, experiments undertaken to gauge the effectiveness of propaganda will never work because the tests “cannot reproduce the real propaganda situation.”
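Graph density, the fraction of possible follow links that actually exist, is one common way to express the cohesiveness of a network; it is offered here as an illustration, not as the method behind the estimate above. The two groups and the `density` helper below are hypothetical.

```python
def density(nodes, edges):
    """Directed graph density: observed edges / possible edges, n * (n - 1)."""
    n = len(nodes)
    return len(edges) / (n * (n - 1))

# A tightly coordinated cluster where every account follows every other ...
coordinated = ["d1", "d2", "d3", "d4"]
coordinated_edges = [(a, b) for a in coordinated for b in coordinated if a != b]

# ... versus an organic group with only a few follow links.
organic = ["u1", "u2", "u3", "u4"]
organic_edges = [("u1", "u2"), ("u3", "u1"), ("u4", "u2")]

print(density(coordinated, coordinated_edges))  # → 1.0
print(density(organic, organic_edges))          # → 0.25
```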

Adrian Chen, the New York Times reporter who originally uncovered the St. Petersburg troll network in 2015, went back to Russia in the summer of 2016. Russian activists he interviewed claimed that the troll farm’s purpose “was not to brainwash readers, but to overwhelm social media with a flood of fake content, seeding doubt and paranoia, and destroying the possibility of using the Internet as a democratic space.” The troll farm used similar techniques to drown out anti-Putin trends on Russian social media. The Congressional Research Service summarized the Russian troll operation succinctly in a January 2017 report: “Cyber tools were also used [by Russia] to create psychological effects in the American population. The likely collateral effects of these activities include compromising the fidelity of information, sowing discord and doubt in the American public about the validity of intelligence community reports, and prompting questions about the democratic process itself.”

For Russia, information warfare is a specialized type of war, and modern tools make social media a weapon. According to a former senior Obama administration official, Russians regard the information sphere as a domain of warfare on a sliding scale of conflict that always exists between the U.S. and Russia. This perspective was on display at Infoforum 2016, a Russian national security conference, where senior Kremlin advisor Andrey Krutskikh compared Russia’s information warfare to a nuclear bomb, which would “allow Russia to talk to Americans as equals,” in the same way that the Soviet test of the atomic bomb did in 1949.

The future of weaponized social media

Smear campaigns have been around since the beginning of politics, but recently employed techniques give such attacks new credibility once they trend on Twitter. The attacks, often under the guise of a “whistleblower” campaign, make routine political actions seem scandalous. As with the Podesta email releases, several politicians and business leaders around the world have fallen victim to this type of attack.

Recall the 2014 North Korean hack of Sony Pictures Entertainment. The fallout at the company stemmed not from the hacking itself but from the release of embarrassing emails from Sony senior management, as well as the salaries of every employee. The uproar over the emails dominated social media, often fed by salacious stories like the RT headline: “Leaked Sony emails exhibit wealthy elite’s maneuvering to get child into Ivy League school.” Ultimately, Sony fired a senior executive because of the content of her emails. In another example, from May 2017, nine gigabytes of email stolen from French presidential candidate Emmanuel Macron’s campaign were released online and verified by WikiLeaks. The hashtag #MacronLeaks subsequently trended to number one worldwide. This influence operation resembled the #PodestaEmail campaign, with a supporting cast of some of the same actors. During the weeks preceding the French election, many accounts within the Deplorable Network changed their names to support Macron’s opponent, Marine Le Pen. These accounts mostly tweeted in English and still engaged in American political topics as well as French issues. Some of the accounts also tweeted in French, and a new network of French-tweeting bot accounts used the same methods as the Deplorable Network to take command of the trend.

In his book Out of the Mountains, David Kilcullen describes a future comprising large, coastal urban areas filled with potential threats, all connected. The implications are twofold. First, networks of malicious nonstate actors would be able to band together to hijack social media. Although these groups may not have the power to create global trends, they can certainly create chaos with smaller numbers by hijacking trends and creating local trends. With minimal resources, a small group can create a bot network to amplify its message. Second, scores of people with exposure to social media are vulnerable to online propaganda. In this regard, state actors can use the Russian playbook. Russia will likely continue to dominate this new battlespace. It has intelligence assets, hackers, cyber warrior trolls, massive bot networks, state-owned news networks with global reach, and established networks within the countries Russia seeks to attack via social media. Most importantly, the Russians have a history of spreading propaganda. After the 2016 U.S. elections, Russian trolls worked toward influencing European elections. They have been active in France, the Balkans and the Czech Republic using active measures and coercive social media messages, as Anthony Faiola describes in a January 2017 article for The Washington Post.

Conclusion

Propaganda is a powerful tool; used effectively, it has been shown to manipulate populations on a massive scale. Using social media to take command of the trend makes spreading propaganda easier than ever before for both state and nonstate actors.

Fortunately, social media companies are taking steps to combat malicious use. Facebook is working to raise awareness of fake news and has introduced a process for removing links to it. Twitter has begun discreetly removing unsavory trends within minutes of their rise in popularity. Adversaries adapt, however: Twitter trolls have tried to regain command of the trend by deliberately misspelling a trend once it has been taken out of circulation.

The measures enacted by Facebook and Twitter are important for preventing future wars in the information domain. Even so, Twitter will continue to struggle with trend hijacking and bot networks. As #PrayforMizzou demonstrated, real events around the world will stay popular because well-intentioned users want to talk about them. Removing the trends function could end the use of social media as a weapon, but it could also devalue Twitter’s usability. Rooting out bot accounts would be equally effective, since it would nearly eliminate the possibility of trend creation. Unfortunately, it would also harm advertising firms that rely on Twitter to generate revenue for their products.

Because social media companies are trying to balance the interests of their businesses against the betterment of society, other institutions must also respond to the malicious use of social media. In particular, social media influence campaigns have called the credibility of our press into question. To restore that credibility, news outlets should adopt social media policies that discourage their employees from relying on Twitter as a source. This will require a culture shift within the press; fortunately, the issue has drawn significant attention from university researchers studying the media’s role in influence operations. Notably, the French press did not report on the content of the Macron leaks; instead, journalists covered the hacking and influence operation without lending any credibility to the leaked information.

Finally, elected officials must move past the partisan divide over Russian influence in the 2016 U.S. election. This requires two things. First, both political parties must recognize what happened, neither minimizing nor overplaying Russian active measures. Second, and most importantly, politicians must commit to not using active measures to their own benefit. The appeal of free negative advertising may well tempt politicians to exploit disinformation, but a foreign influence operation damages more than just the other party; it damages our democratic ideals. The late U.S. Sen. John McCain summarized this sentiment well at a CNN town hall: “Have no doubt, what the Russians tried to do to our election could have destroyed democracy. That’s why we’ve got to pay … a lot more attention to the Russians.”

This was not the cyber war we were promised. Predictions of a catastrophic cyber attack dominated policy discussion, but few realized that social media could be used as a weapon against the minds of the population. Russia is a model for this future war that uses social media to directly influence people. As technology improves, techniques are refined and internet connectivity continues to proliferate around the world, this saying will ring true: He who controls the trend will control the narrative — and, ultimately, the narrative controls the will of the people.
